Some of you have curiously asked me and Emil what is actually done by the backend. Is anything done at all or do we simply just fake it?

I made that last part up just about now. Everyone seems to think that me and Emil are honest guys who have developed something that works for once. And that is actually true! What is also true is the horrendous rumors saying the entire backend is implemented in Bash. How did THAT happen?

Well, Bash is rather nice when you want to script some minor repetitive tasks to be automated for you. If you are careful to structure your scripts well it also ends up quite readable.

On the other hand this has gone way out of hands by now, and we have created a small but growing Bash monster.

The technical workflow

- You and your fellow friends and colleagues code and solve puzzles,

- You push your code to GitHub,

- Crontab is configured on our fancy server to trigger the script

aoc.shonce every hour, - Freshly updated code are pull from your GitHub repo,

- The code is analysed in various ways,

- Notifications are sent via Slack integrations,

- Data is generated for the frontend,

- The frontend is rebuilt and pushed to GitHub Pages,

- Voila! This site is updated with leaderboards and all.

The server runs the following command to start analysing your solutions.

$ aoc.sh -u users.json

with users.json holding all information needed about you as a participants. For example:

{

"users" : [

{

"username": "[email protected]",

"aoc_id": "1259675",

"repo": "https://github.com/emilb/aoc2021",

"name": "Emil Breding"

},

{

"username": "[email protected]",

"aoc_id": "1487429",

"repo": "https://github.com/partjarnberg/aoc2021",

"name": "Pär Tjärnberg"

}

]

}

Show me the code for backend!

Here is the main program triggered by crontab. Each part elaborated on separate sections.

#!/usr/bin/env bash

shopt -s expand_aliases

if [ -f ~/.bash_aliases ]; then

. ~/.bash_aliases

fi

source config/verifypreconditions.sh

source config/config.sh

source feature/spinner.sh

source feature/checkout.sh

source feature/executiontime.sh

source feature/completiondaytimestamp.sh

source feature/linguist/languageanalysis.sh

source feature/validate_solutions.sh

source feature/slack_publish_solution.sh

usage () { echo "HOW TO USE:

$ aoc.sh -u <json with users>

EXAMPLE:

$ aoc.sh -u users.json

"; }

if [[ $# -eq 0 ]]; then

usage; exit;

fi

while getopts ":u:h" option; do

case $option in

u ) users="$OPTARG";;

h ) usage; exit;;

\? ) echo "Unknown option: -$OPTARG" >&2; exit 1;;

: ) echo "Missing option argument for -$OPTARG" >&2; exit 1;;

* ) echo "Unimplemented option: -$OPTARG" >&2; exit 1;;

esac

done

spin &

SPIN_PID=$!

# Place all features for analyzing solutions here

checkout $users

get_leaderboard_json

execution_time $users

completion_day_timestamp $users

language_analysis $users

kill -KILL $SPIN_PID

Configurations

Verifying preconditions

The script starts by verifying its preconditions. The script requires support for associative arrays which was introduced in Bash version 4 and also utilize jq for parsing json and libpq as Postgres client.

Config

All credentials to integrate with Advent of Code REST API, Postgres database and Slack are all gathered here.

Also, datastructures shared between features are initialized in this configuration.

Features

Spinner

The most important feature is of course the spinner showing us the progress of the analysis when running it manually 😉

#!/usr/bin/env bash

function spin() {

spinner="/|\\-/|\\-"

while : ; do

for i in `seq 0 7`; do

echo -n "${spinner:$i:1}"

echo -en "\010"

sleep 1

done

done

}

Checkout

This feature is quite self-explanatory as it will pull the code from GitHub for each participant.

Validate solutions

Before any analysis is done the solutions are validated by checking if a correct solution have been acknowledged for the current participant via Advent of Code’s REST API.

#!/usr/bin/env bash

function get_leaderboard_json() {

if [ ! -f "${AOC_PRIVATE_LEADERBOARD_LOCAL_JSON}" ] || test "$(find ${AOC_PRIVATE_LEADERBOARD_LOCAL_JSON} -mmin +20)"; then

echo "Long time since update. Fetching leaderboard from AoC server."

mkdir -p data

touch "${AOC_PRIVATE_LEADERBOARD_LOCAL_JSON}"

curl -s --cookie "session=${AOC_SESSION_COOKIE}" "${AOC_PRIVATE_LEADERBOARD_URL}" -o "${AOC_PRIVATE_LEADERBOARD_LOCAL_JSON}"

fi

}

function validate_solution_for_user_day_part() {

GET_STAR_TIME_STAMP=$(jq -c ".members[] | select( .id==\"$1\") | .completion_day_level | .\"$2\" | .\"$3\"" $AOC_PRIVATE_LEADERBOARD_LOCAL_JSON)

#echo "GET_STAR_TIME_STAMP: ${GET_STAR_TIME_STAMP}"

if [ "${GET_STAR_TIME_STAMP}" != "null" ] && [ -n "${GET_STAR_TIME_STAMP}" ]; then

echo "valid"

else

echo "invalid"

fi

}

Execution Time

This feature is the reason why we all package our solutions in a Docker container. The server runs each solution and measure the execution time by spinning up each docker image. In our first naive approach we had no limitations whatsoever in a belief that no one would push long-running solutions.

It took about two days to prove us wrong when a brute force solution by a dear colleague caused our server to burn its CPU for about an hour or so.

At the moment the time cap is set to 15 seconds for your algorithm to have time to finish or the feature will abruptly end the current docker execution.

...

if [ "$part1" = "valid" ]; then

timeout 15s docker run --name $containerPart1 -e part=part1 "${dockerImage}" > /dev/null

fi

if [ "$part2" = "valid" ]; then

timeout 15s docker run --name $containerPart2 -e part=part2 "${dockerImage}" > /dev/null

fi

...

Completion Day Timestamp

This is the same measurement as the ordinary Advent of Code, and we steal it with pride from their REST API.

Language Analysis

This feature is based on Linguist used on GitHub to detect blob languages. We use a fork by Torbjörn Gannholm to also support Tailspin.

In addition to detect languages being used this feature also count lines of code of your solutions.

...

# Aggregate identified programming languages

declare -a identifiedLanguages

((linesOfCode=0))

while IFS= read -r -d '' file; do

...

analysis_result=$(docker run --rm -v "$realpathUserDir":/linguist-analyze/ -w /linguist-analyze linguist:aoc github-linguist $subFilePath --json)

detectedLang=$(echo $analysis_result | jq '.["'"$subFilePath"'"].language' | sed -e 's/"//g')

countedLines=$(echo $analysis_result | jq '.["'"$subFilePath"'"].sloc' | sed -e 's/"//g')

...

identifiedLanguages+=("${detectedLang}")

((linesOfCode+=countedLines))

...

done

...

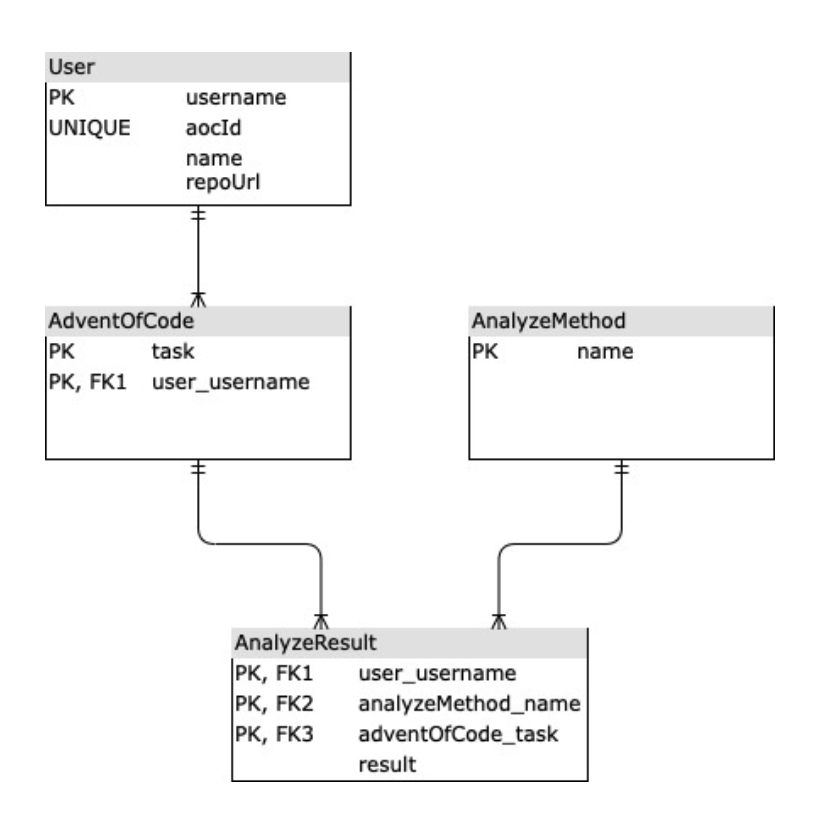

Postgres to keep state

All the following features write their analysis result to a PostgreSQL database saving current state. And for the frontend to use when backing up various leaderboards with data.

- Execution time

- Lines of code

- Language analysis

- Completion day timestamp

The analysis is somewhat sophisticated and will only analyse solutions if a change is detected. That is the same solution will not be analysed several times a day unless you do some code changes.

NEW FEATURE – Slack notifications

This year we also have a couple of Slack integrations embedded into the analysis which we hope will bring extra joy to the event!

And that is it!

And that is it for the backend. Did I mention there are no tests?! Not a single one whatsoever. 😬

Generate data for frontend

Neat and handy little scripts aggregates data stored in database and output json blobs for each leaderboard; execution_time.json,

lines_of_code.json, submission_time.json and programmings_languages.json. Also, the funfacts.json is generated by these scripts.

For example the execution_time.json looks something like this.

{

"execution_time": {

"enable": true,

"title": "Execution time",

"execution_time_leaderboard": [

{

"name": "Emil Breding",

"task": "day01",

"execution_time_ms_part1": 35,

"execution_time_ms_part2": 40,

"execution_time_sum": 75,

"execution_time_average": 37.5,

"programing_Languages": [

"JavaScript"

],

"repo_url": "https://github.com/emilb/aoc2021/tree/main/day01"

},

{

"name": "Pär Tjärnberg",

"task": "day01",

"execution_time_ms_part1": 24,

"execution_time_ms_part2": null,

"execution_time_sum": null,

"execution_time_average": null,

"programing_Languages": [

"Java"

],

"repo_url": "https://github.com/partjarnberg/aoc2021/tree/main/day01"

},

{

"name": "Emil Breding",

"task": "day02",

"execution_time_ms_part1": 35,

"execution_time_ms_part2": 34,

"execution_time_sum": 69,

"execution_time_average": 34.5,

"programing_Languages": [

"JavaScript"

],

"repo_url": "https://github.com/emilb/aoc2021/tree/main/day02"

}

]

}

}

Rebuild frontend and deploy

We use Hugo as frontend and trigger a rebuild of the entire static site by running hugo -t meghna --config config-prod.toml

followed by adding, commiting and pushing to our main branch on GitHub.

This will cause the docs folder to be updated. And that folder is linked to GitHub Pages for aoc-2022 repo.

Voila! This site is updated with leaderboards and all. 🎉🥳🎁🎄

Finally

Best wishes and happy coding!

/Pär